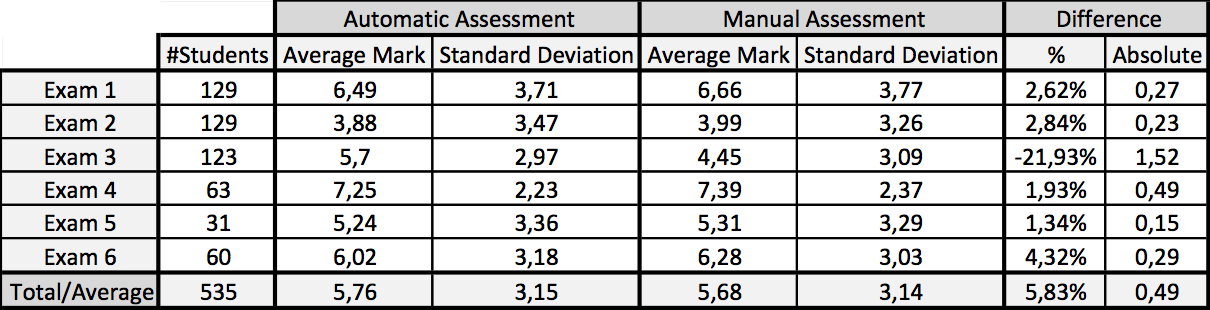

ASys has been tested in real courses at the Universitat Politècnica de València (UPV). The study considered six Java exams from a second-year programming course, involving five teachers and 535 students.

The six exams were similar: each was based on a class model composed of seven to nine classes. The assessment criteria were encoded as 26 to 30 properties specified with the DSL, and the assessment template was then generated automatically. All exams were distributed among the teachers, who first assessed them manually. Six months later, the same teachers assessed the exams again with ASys. ASys performed almost half of the assessment work automatically. It detected 100% of the errors and property violations, and 48% of them were automatically corrected and marked (many students’ exams were marked fully automatically, while others were only partially marked automatically). In the remaining 52% of cases, ASys identified the error and showed the teacher’s solution and the assessment criteria, thus speeding up the manual assessment, but the teacher made the final decision on the mark. The semi-automatic assessment was also more accurate: on average, the semi-automatic assessment mark was |0.49| points (out of 10) more accurate.

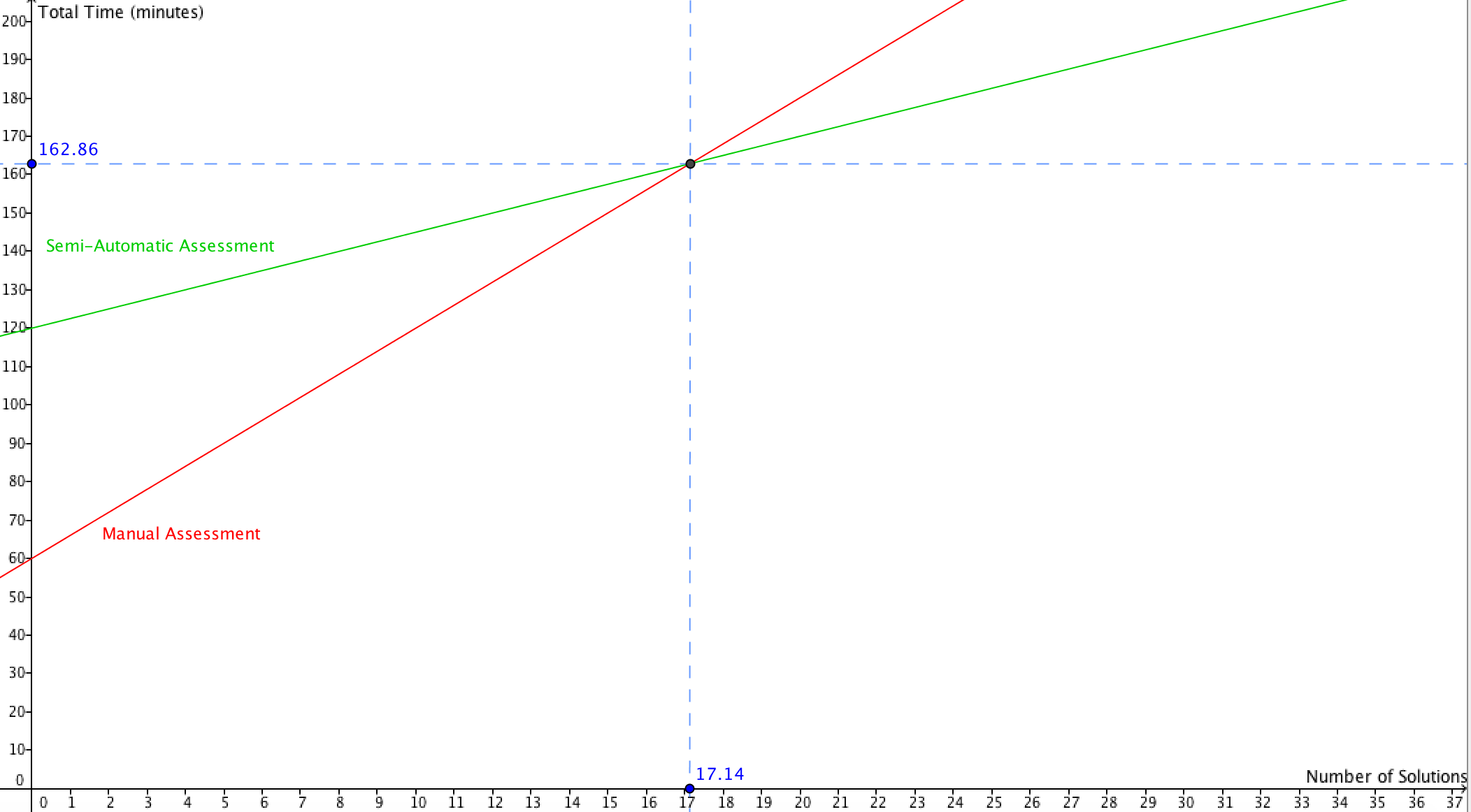

Additionally, a time comparison between both assessment methods was performed. The study revealed that teachers invested, on average, one extra hour in the preparation of each exam: two hours instead of one. In the assessment itself, however, half of the work was done automatically, and the other half was significantly faster. The error was detected automatically, the exact file was already open with the relevant line highlighted, and the teacher only had to correct it with the teacher’s solution already open and, in most cases, with a listbox offering the corrections already made for the same error in previous students’ exams. On average, the manual assessment took 6 minutes per exam, while the assessment with ASys took 2.5 minutes. Therefore, the total time invested in the manual assessment was 6 minutes * 535 exams = 53.5 hours, while with ASys it was 2.5 minutes * 535 exams = 22.3 hours (31.2 hours less). Considering both the exam preparation (one extra hour for each of the six exams) and the assessment times, ASys saved 25.2 hours, which is 47.1% of the total manual assessment time.
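The arithmetic behind these totals can be reproduced with a short script. This is only a sketch: the variable names are illustrative, and it assumes that the extra preparation time is one hour for each of the six exams, which is consistent with the difference between the 31.2 hours and the 25.2 hours reported above.

```python
# Figures reported in the study; all names here are illustrative.
NUM_EXAMS = 6                    # distinct exams that were prepared
NUM_SOLUTIONS = 535              # student solutions that were assessed
MANUAL_MIN_PER_SOLUTION = 6.0    # average manual assessment time (minutes)
ASYS_MIN_PER_SOLUTION = 2.5      # average assessment time with ASys (minutes)
EXTRA_PREP_HOURS_PER_EXAM = 1.0  # assumption: one extra hour per exam

# Total assessment time for each method, converted to hours.
manual_hours = MANUAL_MIN_PER_SOLUTION * NUM_SOLUTIONS / 60
asys_hours = ASYS_MIN_PER_SOLUTION * NUM_SOLUTIONS / 60

# Saving in assessment alone, then net of the extra preparation effort.
assessment_saving = manual_hours - asys_hours
extra_prep_hours = EXTRA_PREP_HOURS_PER_EXAM * NUM_EXAMS
net_saving = assessment_saving - extra_prep_hours

# Net saving relative to the total manual assessment time.
saving_ratio = net_saving / manual_hours

print(f"manual assessment: {manual_hours:.1f} h")
print(f"ASys assessment:   {asys_hours:.1f} h")
print(f"net saving:        {net_saving:.1f} h ({saving_ratio:.1%})")
```

Under these assumptions the script yields the same rounded figures as the text: 53.5 hours, 22.3 hours, and a net saving of 25.2 hours (47.1%).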

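This experiment allows us to approximate the relationship between the time needed for manual assessment and for ASys assessment. The total time with ASys is approximated by the expression (2*P)+(N*0.42*A), where P is the time needed to prepare an exam for manual assessment, N is the number of student solutions to assess, and A is the time needed to manually assess an individual student solution. The factor 2 reflects the doubled preparation time, and the factor 0.42 is the ratio between the measured assessment times (2.5/6). The total time in the manual assessment is P+(N*A). Both times grow linearly with N, but the ASys time grows more slowly, and thus for large courses the ASys assessment can save a lot of time. ASys is faster when (2*P)+(N*0.42*A) < P+(N*A), that is, when N > P/(0.58*A). With the values measured in this study (P = 60 minutes and A = 6 minutes), this gives N > 17.2, so the ASys assessment starts to save time with groups bigger than 17 students.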
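The break-even point can be checked numerically. The sketch below encodes the two time models given above and solves for the smallest group size at which ASys is faster. The function names are hypothetical, and the default factors (2 for preparation, 0.42 for assessment) are the ones measured in this study, so they may not carry over to other courses.

```python
import math


def manual_time(p: float, n: int, a: float) -> float:
    """Total manual time: P + N*A (same time unit for p and a)."""
    return p + n * a


def asys_time(p: float, n: int, a: float,
              prep_factor: float = 2.0,
              assess_factor: float = 0.42) -> float:
    """Total ASys time: 2*P + N*0.42*A with the factors from this study."""
    return prep_factor * p + n * assess_factor * a


def break_even_group_size(p: float, a: float,
                          prep_factor: float = 2.0,
                          assess_factor: float = 0.42) -> int:
    """Smallest N for which asys_time is strictly below manual_time.

    Derived from prep_factor*P + N*assess_factor*A < P + N*A,
    i.e. N > (prep_factor - 1)*P / ((1 - assess_factor)*A).
    """
    threshold = (prep_factor - 1) * p / ((1 - assess_factor) * a)
    return math.floor(threshold) + 1


# Values from the study, in minutes: P = 60, A = 6.
first_saving_size = break_even_group_size(60, 6)
print(first_saving_size)
```

With P = 60 minutes and A = 6 minutes, the threshold is about 17.2, so a group of 17 is still slightly slower with ASys (162.84 versus 162 minutes), while a group of 18 is faster (165.36 versus 168 minutes).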