The manual assessment is error-prone

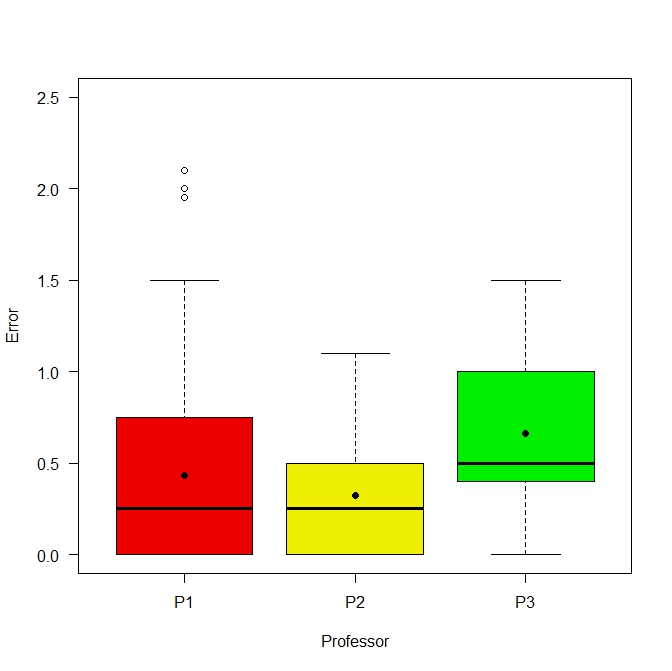

A study performed at UPV evaluated the errors made by university teachers in manual assessment. It concluded that:

- The average absolute error was 0.49 points out of 10.

- The error was positive 75% of the time (the student benefited) and negative 25% of the time (the student was harmed).
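As a rough illustration of how these two figures can be computed, the sketch below compares manual marks against reference marks for the same exams. It makes several assumptions that the study does not confirm: that the reference is the semi-automatic mark, that the error is the manual mark minus the reference mark, and that the positive and negative shares are taken over non-zero errors only. The function name `error_summary` and the sample marks are hypothetical and are not data from the study.

```python
from statistics import mean


def error_summary(manual, reference):
    """Summarise manual marking errors against reference marks (out of 10).

    Assumption: error = manual mark - reference mark, so a positive
    error means the student benefited from the manual assessment.
    """
    errors = [m - r for m, r in zip(manual, reference)]
    # Assumption: exams marked without error are left out of the shares.
    nonzero = [e for e in errors if e != 0]
    total = len(nonzero)
    return {
        "mean_abs_error": mean(abs(e) for e in errors),
        "share_positive": sum(e > 0 for e in nonzero) / total if total else 0.0,
        "share_negative": sum(e < 0 for e in nonzero) / total if total else 0.0,
    }


# Made-up marks for illustration only; NOT data from the study.
manual = [7.5, 6.0, 8.0, 5.5, 9.0]
reference = [7.0, 6.0, 7.5, 6.0, 8.5]
summary = error_summary(manual, reference)
print(summary)
```

With these made-up marks, three of the four non-zero errors favour the student, which mirrors the kind of asymmetry the study reports.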

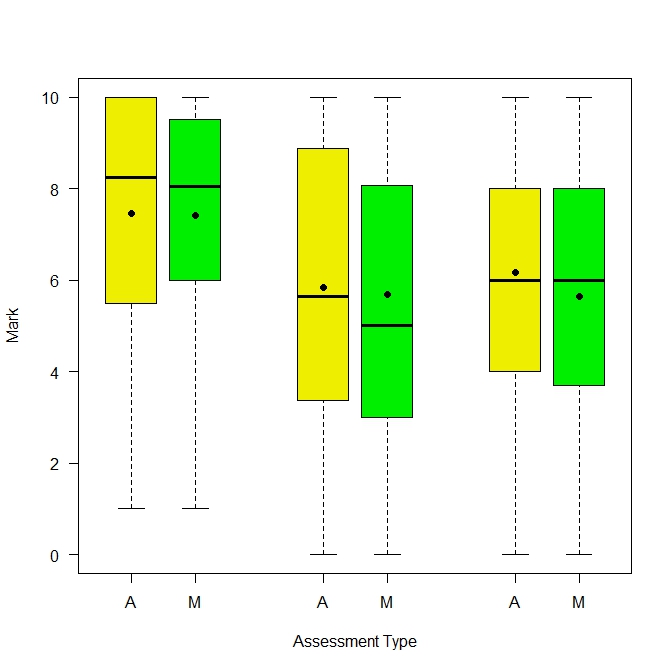

The left figure shows the distribution of marks obtained by three different teachers for the same exam. The yellow box represents the marks obtained with semi-automatic assessment, while the green box represents the marks obtained manually.

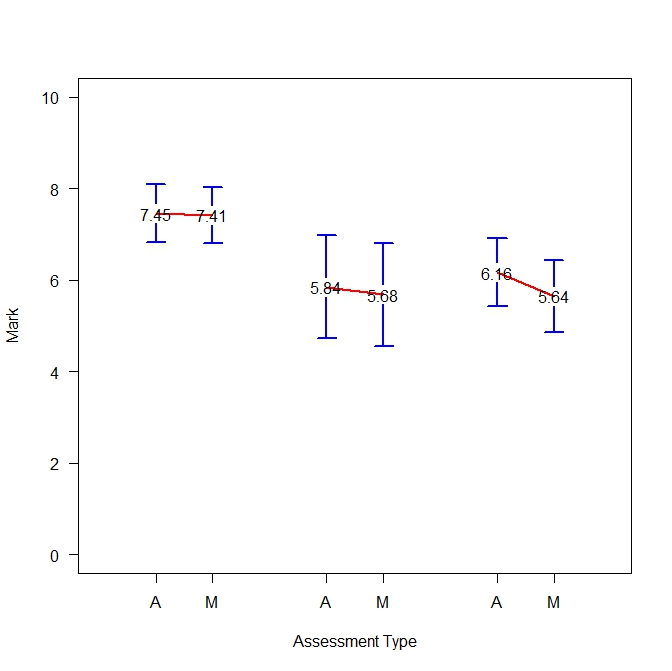

The middle figure shows, for the three teachers, the difference between the average marks obtained with manual assessment and with semi-automatic assessment.

The right figure is a box plot of the distribution of the assessment errors made by the teachers.

- The main causes of the errors were:

    1. wrong code introduced by the students in classes not involved in the exercise (and thus neither reviewed nor penalized by the teacher),

    2. type errors that do not affect the result,

    3. incorrect use of interfaces,

    4. code that is correct but is marked as wrong because it is surrounded by wrong code,

    5. messy code that is very difficult to understand even though it is correct,

    6. introduction of dead code, and

    7. teachers’ errors when marking.

- Speed. The assessment is much quicker, and the student gets feedback straight after the exam. In the manual assessment done in the experiment, the students took an exam and received their mark by email two weeks later. They could then review the exam with the teacher but, after two weeks, they hardly remembered its details. Effectively, the exam served only to mark the students, not as a learning resource. In contrast, with AA, the students can self-assess their exams straight after finishing them, learn their mark, and correct their own errors, with a self-corrected version of their own code available.

- Fairness. The assessment is fair: identical errors are evaluated equally, and the teachers have no influence on the marking.

- Independence. The assessment of one exam is not affected by the assessment of the previous exams. In manual assessment, a very good (respectively, very bad) exam can lead the teacher to judge the next exam as bad (respectively, good) by comparison.